[German] Facebook recently changed its strategy regarding policy violations. With a lot of prose, users are supposed to be "educated" about policy violations so that they avoid them – this is supposed to prevent the previous "blocks". Since "Facebook is broken by design", the "training" is simply a placebo ("white salve", as the German idiom goes) and an attempt to save what cannot be saved. For me, it's a great opportunity to outline how broken Facebook and its AI algorithms for content control are. And it is a prime example showing that these "filters", which the EU Commission & Co. also keep bringing into play to control internet content, are failing and that AI is dragging people into the abyss.

Facebook and its content moderation

Facebook as a platform is subject to numerous legal restrictions and is forced to filter content and remove posts if necessary. This is understandable. Anyone who repeatedly violates the community guidelines will be blocked at some point (initially for 30 days, later permanently). So far so familiar. The platform also relies on algorithms for rating and filtering. And teams of moderators work in the background to evaluate potential violations and inconsistencies and, if necessary, lift bans. Now Facebook is "thinking ahead".

Community guidelines training for violations

It was a German article that immediately caught my attention. The message was: Facebook has changed its strategy on how to respond to users' policy violations. Users who violate the community standards, as they're called in Facebook-speak, can learn their way out as creators. The Facebook post is available here – sounds good, but let's check it against reality.

The fight against Facebook AI

Yes, I'm a content creator on Facebook because I link to my blog posts and enrich this link with a little teaser text. On Facebook, I reach thousands of administrators in various IT groups and I sometimes receive tips about issues. It could all be fine – and I'm even verified as a journalist on Facebook – I don't appear there privately and I wouldn't have a private account there either.

Unfortunately, at some point Facebook succumbed to the call of artificial intelligence and had the posts checked, evaluated and, if necessary, blocked by an AI. And that's where I regularly come into conflict with Facebook. Whenever I share posts from my profile in several IT groups, the AI seems to jump in and take a look.

I blogged about the AnyDesk incident in February 2024 (see e.g. AnyDesk: They have signed the client with an old certificate (April 24, 2024)) and shared the articles on Facebook – it's relevant for IT people, after all. The Facebook AI promptly recognizes a problem and blocks the post because "malware is being spread and community standards have been violated".

I now have this hamster wheel every few days, especially when it comes to posts about security incidents or related topics. The fact that such posts on Facebook are followed by numerous bots with comments promising to retrieve hacked accounts is just the tip of the iceberg (or broken by design – I report the bots, delete the comments and block them from my profile).

The usual procedure is then: I am informed about the blocking or deletion of the post, and Facebook gives me the opportunity to lodge a standardized objection. There are two or three dialog boxes with selection fields where I state why it is not a violation.

- Stupidity #1: I actually have better things to do than tell the stupid Facebook machinery "Guys, you've made a mistake again, correct it".

- Stupidity #2: And that's doubly stupid or broken by design – in the AnyDesk case, several posts shared in various groups were blocked – making a total of around 28 "violations".

Regarding Stupidity #2: I have complained about three or four blocked posts – but the moderation team processes only exactly these reports. The fact that further posts are blocked as well is either of no interest or is not shown to the moderators. As a result, some security messages are floating around in my profile as "blocked due to violation of the community standard". Ok, if Facebook is just stupid, you can't change that.

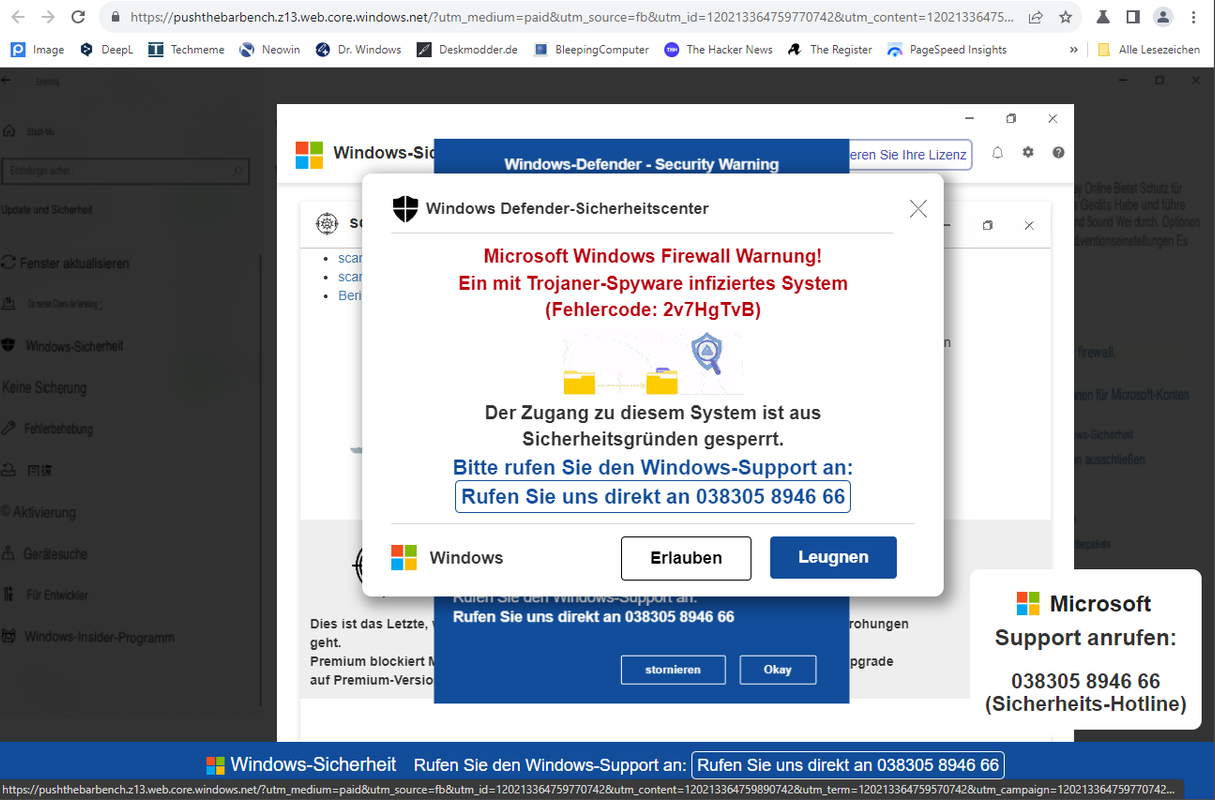

The escalation: Blocked after addressing a FB ad with TechScam

Last week, I came across a tech scam campaign using ads placed and played on Facebook. I got such an ad while visiting my Facebook profile, so I wrote about the incident in the blog post Scam-Warning: Fake Trojan alert (here shown via Facebook ads) and sent a message to Facebook support on Saturday with a link to that post.

Fake Trojan alert via Facebook ads

The naive person thinks "hmm, Facebook support is looking into it", because the ID of the ad placed by the scammer can be seen in the screenshot. The realist whispers "could have saved your breath, nothing is happening". On Monday morning, I am greeted by a scam ad on the Facebook page.

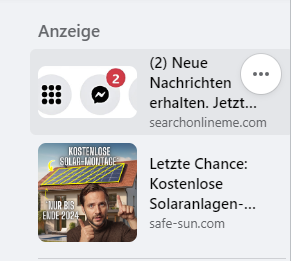

The screenshot above shows an excerpt of the page – in the "Ad" section there is a graphic showing Facebook Messenger and claiming that I have received two messages. When I saw this, I realized that this was the scam ad mentioned above. If you click on the supposed notification, the fake Trojan warning appears in the browser. Facebook continues to deliver the scam campaign.

What does the "Facebook content creator" do at this moment? He writes a short Facebook post along the lines of "Hello, if you see the above message in your Facebook profile, ignore it, this is a tech scam showing a fake Trojan warning." to his "followers". Here is the text of the warning, which I have garnished with the image above.

Just for the record: You may see the following display in the top right-hand corner of Facebook. It looks as if two Messenger messages have arrived. If you click on the ad, you will suddenly receive a pop-up with a fake Trojan warning and the request to "call Microsoft Support". The scam has been known for years as a tech support scam.

I already documented the case in the article on Saturday and also reported it to Facebook support. FB doesn't seem to care that fake ads from scammers happen – after all, FB collects money. Instead, their AI likes to block posts from me "as a violation of community standards". Well done …

I then shared the post from my profile on my Facebook page and in two groups (on IT security) – after all, I want to warn people.

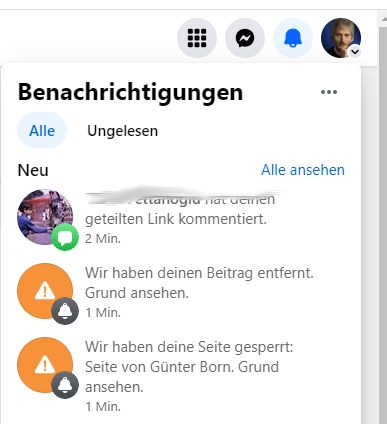

As Facebook writes in its announcement cited above: We all make mistakes … Seconds after my posts, I had the above two notifications from Facebook in my profile. The first message informed me that my post, which was automatically shared on my Facebook page, had been deleted – the reason given was a violation of community standards. And the second message informed me that my Facebook page had been blocked. All messages are in German, due to my Facebook profile settings.

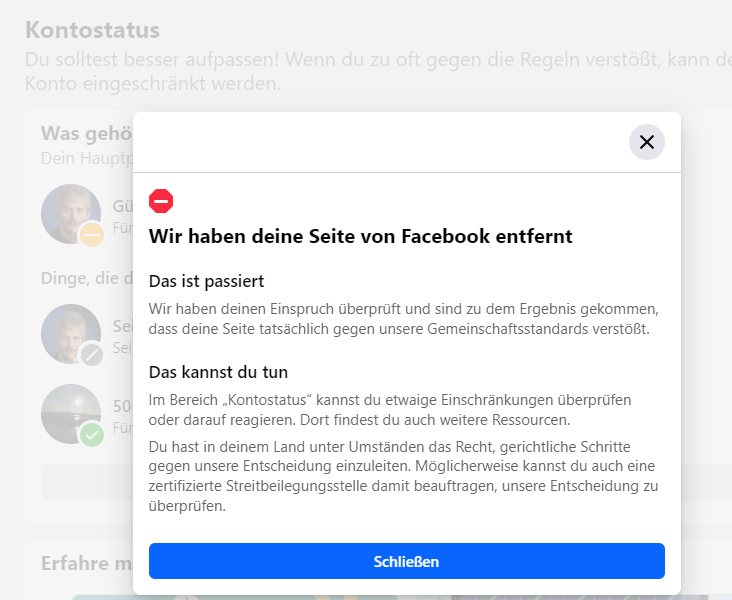

Well, I only had 200 followers on my Facebook page. Anyway – it was an attempt to inform people who weren't following my profile about new posts. I wasn't aware of any wrongdoing – a warning about tech scams is not a violation of community standards – unless you postulate that Facebook deliberately delivers these ads because they get paid for it.

Ergo, I filed an objection to this AI decision via the standardized Facebook dialogs. I was hoping that it would then be reviewed by a human moderator. My thought was: "If someone with a brain is sitting there, they will take back the block" – but I hadn't factored in Einstein's theorem about the infinity of the universe and human stupidity. A minute later, I received the above notification – my Facebook page was now permanently blocked.

I've simply deleted my Facebook page – it's useless, I've informed my followers on FB and will only post on the platform to a very limited extent, if at all. The Creator is gone – and a sack of rice has fallen over in China – I have no illusions about that.

In the meantime, I have feedback from other Facebook users whose paid IT job ads are being blocked as "scam", while Messenger is flushing countless Meta Business Support136266362 requests at people.

Final thoughts: FB is broken, AI is broken

The case outlined above is wonderful proof of the thesis that all the content filters that are demanded, tested and used simply don't work. The collateral damage just keeps piling up. And it is a great example of how AI simply fails colossally at what it should actually be good at (according to marketing).

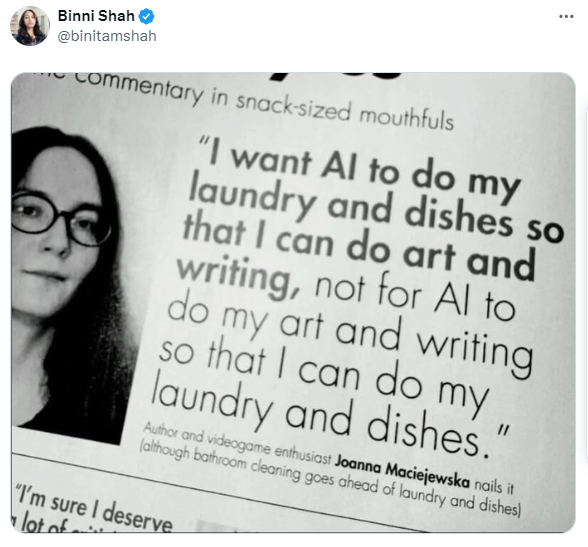

I'll end my rant with a wonderful tweet from Binni Shah, which I came across recently and which hits the nail on the head. It's about what someone really wants from this AI:

The statement is: I want this AI to do my laundry and wash the dishes so that I can devote myself to writing and art. Instead, people dump AI at my feet that promises to take over my writing and the art I create. I'm then left to do the dishes and the laundry. A classic mismatch, I would say.

Your rant is justified, Facebook is broken and flawed by design and possibly on its way to the Dead Internet…